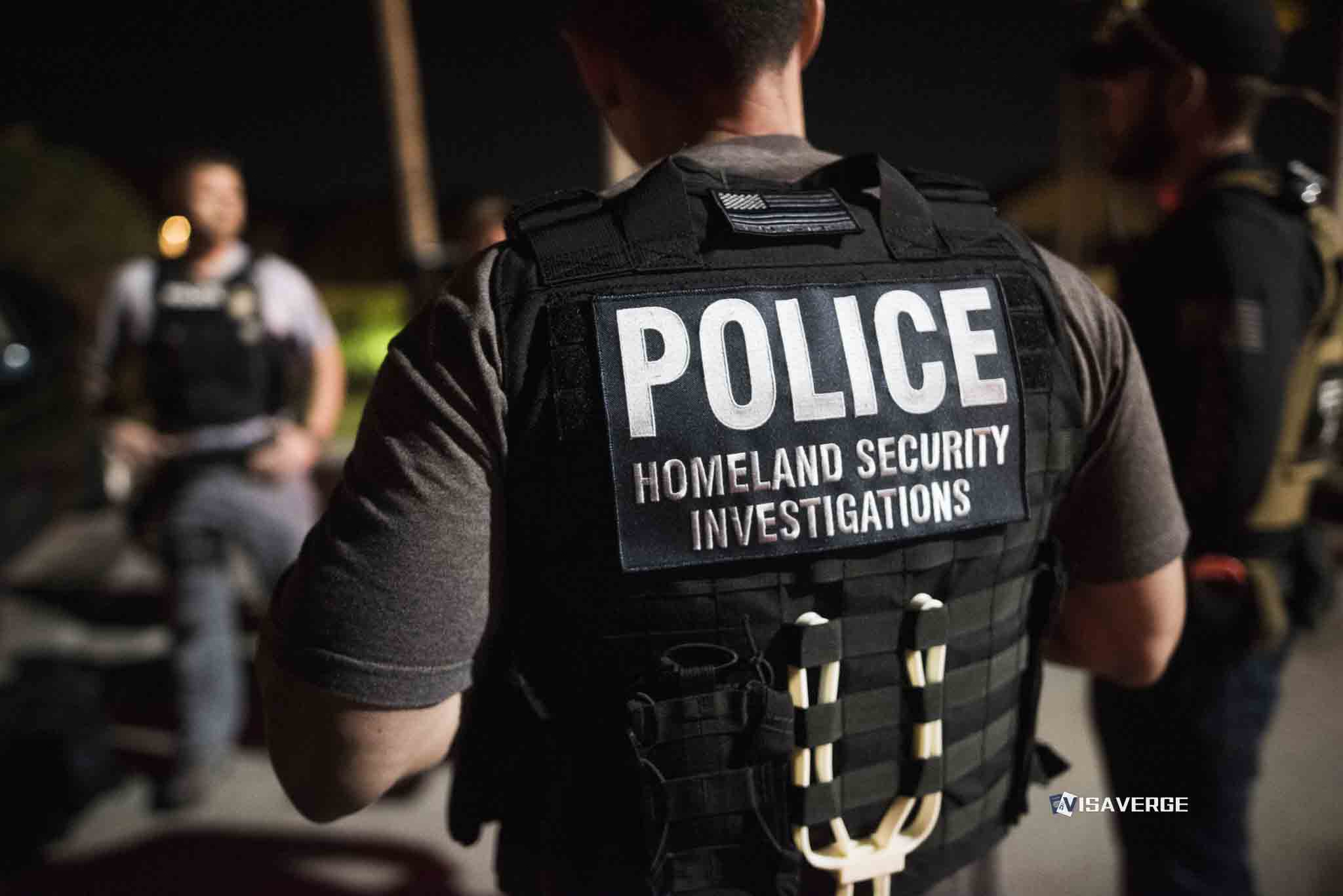

(UNITED STATES) Immigration and Customs Enforcement has secured a $5.7 million, five-year contract for Zignal Labs’ AI-driven social media surveillance platform, a move that expands the agency’s ability to monitor public online posts and tie them to enforcement leads. The deal, awarded through government reseller Carahsoft Technology and disclosed in September 2025, gives ICE’s Homeland Security Investigations unit access to “real-time data analysis for criminal investigations” through September 2028. The platform’s scale and automation mark a sharp step up from earlier tools ICE used to scan social media.

Zignal Labs processes more than 8 billion posts per day in 100+ languages, according to product materials cited by civil liberties groups and government procurement records. The system applies machine learning, computer vision, and optical character recognition to parse text, images, and videos. It can pull metadata such as geolocation, analyze faces and logos in footage, and map connections among people and organizations.

The platform also generates “curated detection feeds,” automated lists that flag accounts or content likely relevant to a given target set. Critics say these can function as watchlists built from public speech.
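To make the watchlist concern concrete, here is a deliberately simple sketch of how any keyword-driven feed turns posts into a ranked list of accounts. Zignal Labs has not published how its curated detection feeds work, so nothing below describes its actual method. The watch terms, account names, posts, and the `build_feed` function are all invented for illustration.

```python
from collections import Counter

# Hypothetical watch terms and posts, invented purely for illustration.
WATCH_TERMS = {"protest", "rally"}

posts = [
    ("user_a", "Join the protest at city hall on Friday"),
    ("user_b", "Great recipe for lentil soup"),
    ("user_a", "Rally starts at noon, bring water"),
    ("user_c", "Sure, I'm 'organizing a protest' against my alarm clock"),
]

def build_feed(posts, watch_terms):
    """Count, per account, how many posts contain at least one watch term."""
    hits = Counter()
    for account, text in posts:
        # Lowercase each word and strip surrounding punctuation before matching.
        words = {w.strip(".,'\"!?").lower() for w in text.split()}
        if words & watch_terms:
            hits[account] += 1
    # Accounts ranked by number of matching posts, most matches first.
    return hits.most_common()

feed = build_feed(posts, WATCH_TERMS)
print(feed)
```

In this toy run the output is a list of accounts, not of posts, and the joke from `user_c` is flagged alongside the real event announcements. That is the pattern critics have in mind when they describe feeds as watchlists built from public speech.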

According to analysis by VisaVerge.com, this acquisition fits a broader pattern: ICE has steadily expanded its digital surveillance toolkit while seeking more staff to watch platforms like Facebook, Instagram, TikTok, X, and YouTube around the clock. Advocates say this trend risks chilling online speech by immigrants and U.S. citizens alike. ICE maintains the aim is to support criminal probes and national security, but the lack of public rules around how agents select targets, store data, and share results has fueled concern.

Contract Details and Capabilities

The Zignal Labs award was first recorded in late September with a performance window through 2028, confirming direct access for ICE after earlier licenses were tied to other Department of Homeland Security components. DHS first procured Zignal for the Secret Service in 2019. Now, ICE joins a list of U.S. government users that includes the Pentagon and other defense entities.

Zignal Labs has also been used by the Israeli military for tactical intelligence in Gaza, highlighting the platform’s reach and dual-use potential in both military and law enforcement settings.

Key advertised features

- Global-scale processing: billions of posts in dozens of languages to surface trends quickly.

- Image and video parsing: extracts text, identifies symbols, and links visual cues to places or people.

- Social graph mapping: shows links between accounts and communities.

- Automated detection feeds: filter and rank content for investigators.

ICE previously relied on tools like ShadowDragon and Babel X, but this purchase appears to raise both scale and sophistication. The agency recently added a separate $7 million contract for “skip tracing services,” underscoring a wider buildout of surveillance and data-mining capacity.

Technical and operational concerns

Civil liberties advocates warn that AI systems trained on huge data streams can:

- Produce false positives.

- Misread sarcasm and contextual nuance.

- Misclassify dialects or minority languages, especially where training data are sparse.

If such feeds are used to steer immigration enforcement, errors could have serious consequences for people with limited resources to challenge government claims. Labor unions have sued over federal social media surveillance they describe as “viewpoint-driven,” arguing it punishes lawful expression and deters organizing.
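The false-positive concern is partly a matter of arithmetic. The sketch below applies Bayes' rule to the 8 billion posts per day cited above. Every other number is an assumption chosen for illustration: no accuracy figures for the platform are public, and the prevalence, sensitivity, and false-positive rate here are hypothetical.

```python
def flag_precision(prevalence, sensitivity, false_positive_rate):
    """Share of flagged posts that are truly relevant (Bayes' rule)."""
    true_flags = prevalence * sensitivity
    false_flags = (1 - prevalence) * false_positive_rate
    return true_flags / (true_flags + false_flags)

POSTS_PER_DAY = 8_000_000_000  # volume figure cited in the article
PREVALENCE = 1e-6              # assumed: 1 in a million posts is truly relevant
SENSITIVITY = 0.99             # assumed: 99% of relevant posts get flagged
FALSE_POSITIVE_RATE = 0.001    # assumed: 0.1% of irrelevant posts get flagged

# Irrelevant posts that would still be flagged each day under these assumptions.
false_flags_per_day = POSTS_PER_DAY * (1 - PREVALENCE) * FALSE_POSITIVE_RATE
precision = flag_precision(PREVALENCE, SENSITIVITY, FALSE_POSITIVE_RATE)

print(f"False flags per day: {false_flags_per_day:,.0f}")
print(f"Share of flags that are correct: {precision:.4%}")
```

Under these assumed rates, a classifier that is wrong only once in a thousand irrelevant posts would still produce roughly 8 million false flags a day, and only about 0.1 percent of its flags would be correct. Real figures could be better or worse. The point is that at this volume even small error rates translate into large numbers of people.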

Civil Liberties and Oversight Questions

Groups including the ACLU and the Surveillance Technology Oversight Project say ICE’s social media surveillance risks violating free speech protections and privacy rights by enabling broad, suspicionless monitoring. They argue automated watchlists built from public chatter can become tools to filter by ideology or activism rather than by credible criminal evidence.

“DHS should not be buying surveillance tools that scrape our social media posts off the internet and then use AI to scrutinize our online speech,” said Patrick Toomey of the ACLU.

Will Owen of the Surveillance Technology Oversight Project called the expansion an assault on democratic norms fueled by algorithms.

As of late October 2025, there is no public evidence of independent audits that measure the system’s accuracy, fairness, or compliance with civil rights rules in the immigration context. That gap troubles attorneys who represent noncitizens. They note that deportation cases often move quickly, with limited discovery into how an investigation began.

If a feed flagged someone’s account because they attended a protest, used certain hashtags, or shared a video, defense counsel may never learn enough about the algorithm to challenge its assumptions. That risk increases when social media surveillance is combined with other databases such as license-plate readers or commercial data brokers.

Arguments in favor

Supporters of stronger digital tools counter:

- Public posts are not private, and agents need to keep pace with criminal networks that recruit, coordinate, and launder money online.

- Social media surveillance can help identify trafficking victims and dismantle smuggling rings.

The policy question becomes how to limit a powerful system so it targets serious crime without sweeping up lawful speech or exposing people to wrongful targeting. For now, there’s little public detail on ICE’s internal policies for Zignal Labs, including:

- How long data is stored.

- Who can access the feeds.

- Written minimization or audit procedures.

Political and Community Impact

The political fight over ICE, already intense during President Trump’s first term and continuing through President Biden’s, has grown alongside these tools. Some Democrats have launched trackers to catalog alleged misconduct, while allies of stricter enforcement argue that digital monitoring is essential to public safety.

For immigrant communities, the impact is immediate and personal. People ask whether liking a post, sharing a protest flyer, or commenting on a video could put them on an automated list. Advocacy lawyers advise clients to be cautious with public profiles, while stressing that lawful speech remains protected.

Yet the fear is that AI-driven triage can harden bias, pushing certain communities into more scrutiny while their voices are discounted as noise or flagged as threats. Experts suggest a few core policy questions:

- What is the threshold for agents to act on a social media flag?

- Are there written, public standards for minimizing collection of First Amendment-protected speech?

- Who audits outcomes for bias across language, race, nationality, and political viewpoint?

- How long is data retained, and is it shared with other agencies or private partners?

So far, answers are sparse. ICE has not publicly detailed the rules of the road for Zignal Labs beyond standard references to criminal investigations. Without clear limits, civil liberties groups worry that social media surveillance could drift from specific leads to broader scanning that chills civic participation—from protests to campus organizing.

Global Context and Industry Shift

Zignal Labs, founded in 2011, pivoted from public relations and political communications to defense and intelligence clients by 2021. Its work with the U.S. Marines, Pentagon entities, and the Israeli military demonstrates how private-sector analytics can move quickly into security missions.

In immigration enforcement, that shift has been underway since the late 2010s and accelerated under President Trump, with continuity into 2025. The United States now sits at the center of a worldwide market for state-run data mining, where line agents can monitor trends in real time while relying on machine-generated scores to prioritize targets.

Practical Advice for the Public

Practical steps for people concerned about misuse include:

- Set stricter privacy controls on social accounts.

- Avoid posting sensitive details publicly.

- Keep records of potential misidentification or problematic interactions.

- Community groups should document cases where online expression appears to trigger enforcement to build evidence for lawmakers and courts.

- Lawyers suggest immigrants consult counsel before discussing sensitive matters online during pending cases.

Advocates also caution against giving up public speech: rights do not vanish because a tool can monitor them.

Options for Congress and Oversight

Possible legislative and oversight responses:

- Mandate external audits of these systems.

- Require public reporting on enforcement actions tied to social media.

- Set bright-line bans on monitoring protected categories of speech.

- Limit retention periods, restrict data sharing, and require disclosure to defendants when an algorithmic flag was used.

These steps would not end surveillance, but they would bring sunlight and a measure of due process to a powerful and growing system.

ICE’s parent department points to its mission and the need to adapt to the digital age. Yet as long as policies remain opaque, doubts will persist. The fight now moves to courtrooms and oversight committees, where the balance between safety and freedom is tested in real cases, not theory. Until then, Zignal Labs will keep scanning the world’s feeds, sorting signals from noise, and pushing questions about power, error, and speech into the center of U.S. immigration enforcement.

For readers seeking official context on the unit deploying this system, ICE Homeland Security Investigations provides an overview of its mission and authorities on its website.

This Article in a Nutshell

ICE’s Homeland Security Investigations awarded a $5.7 million, five-year contract in September 2025 to Zignal Labs for an AI-driven social media surveillance platform. The system ingests over 8 billion posts daily in more than 100 languages and uses machine learning, computer vision, and OCR to extract metadata such as geolocation, analyze faces and logos, and map social connections. Zignal generates automated curated detection feeds that can flag accounts for investigators; critics say these function like watchlists. Civil liberties groups, including the ACLU and the Surveillance Technology Oversight Project, warn of chilling effects, bias, false positives, and a lack of transparency. No public independent audits or clear ICE policies on retention, access, minimization, or auditing were apparent as of October 2025. Supporters argue public posts are not private and the tools aid criminal investigations and victim identification. Policy options suggested include external audits, public reporting, retention limits, and disclosure to defendants when algorithmic flags are used.